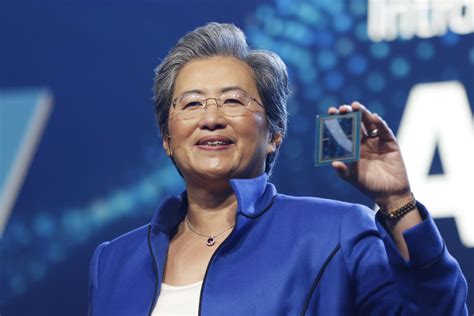

AMD CEO Lisa Su ignited a flurry of discussion in the artificial intelligence market this week, projecting significant growth for the AI chip sector and positioning AMD as a major contender against industry leader Nvidia. Su’s optimistic forecast predicts the AI chip market will reach \$400 billion by 2027, exceeding previous estimates and signaling a potentially seismic shift in the competitive landscape.

AMD CEO’s AI Projections Send Ripples Through the Market

Advanced Micro Devices (AMD) CEO Lisa Su has stirred considerable excitement and debate with her latest projections for the artificial intelligence (AI) chip market. Speaking at a recent technology conference, Su boldly predicted that the AI chip market will surge to \$400 billion by 2027, a substantial increase from earlier forecasts that estimated the market at around \$150 billion. This ambitious outlook has not only bolstered AMD’s stock but also intensified the competition with Nvidia, the current dominant player in the AI chip arena. Su’s statements highlight AMD’s confidence in its ability to capture a significant share of this rapidly expanding market, driven by the increasing demand for AI across various sectors, including data centers, automotive, and consumer electronics.

Challenging Nvidia’s Dominance

For years, Nvidia has held a commanding lead in the AI chip market, particularly in the high-performance computing segment crucial for training and deploying complex AI models. However, AMD is aggressively challenging this dominance with its own suite of AI-focused products, including the MI300 series accelerators. These chips are designed to compete directly with Nvidia’s H100 and upcoming H200 GPUs, offering comparable or even superior performance in certain AI workloads.

“We see AI as the biggest strategic opportunity for AMD,” Su stated during a recent earnings call. “Our focus is on delivering high-performance, energy-efficient solutions that enable our customers to push the boundaries of AI innovation.” AMD’s strategy involves not only developing cutting-edge hardware but also building a robust software ecosystem to support its AI chips. This includes optimizing popular AI frameworks like TensorFlow and PyTorch for AMD hardware, as well as providing developers with comprehensive tools and libraries to facilitate AI application development.

The \$400 Billion Forecast: A Closer Look

Su’s \$400 billion forecast for the AI chip market by 2027 is based on several key factors. First, the increasing adoption of AI across diverse industries is driving unprecedented demand for AI-specific hardware. Companies are investing heavily in AI to automate tasks, improve decision-making, and create new products and services. This is fueling the need for powerful and efficient AI chips that can handle the computational demands of modern AI models.

Second, the complexity of AI models is growing rapidly, requiring ever-increasing amounts of processing power and memory. Training large language models, for example, can take weeks or even months on clusters of specialized accelerators and would be impractical on traditional CPUs alone, making AI chips essential for developing and deploying these models.

Third, the rise of edge computing is creating new opportunities for AI chip vendors. Edge computing involves processing data closer to the source, reducing latency and improving responsiveness. This is particularly important for applications like autonomous vehicles, industrial automation, and healthcare, where real-time decision-making is critical. AMD is well-positioned to capitalize on the edge computing trend with its portfolio of embedded processors and GPUs.

Analysts Weigh In on AMD’s Prospects

Analysts have offered varied perspectives on AMD’s ability to achieve its ambitious goals in the AI chip market. Some analysts are optimistic, citing AMD’s strong product roadmap, growing customer base, and improving software ecosystem. They believe that AMD can capture a significant share of the AI chip market over the next few years, driven by its competitive pricing and performance.

Other analysts are more cautious, pointing to Nvidia’s established market position, extensive software ecosystem, and strong relationships with key customers. They argue that Nvidia has a significant head start in the AI chip market and that it will be difficult for AMD to catch up. However, even these cautious analysts acknowledge that AMD is a credible challenger to Nvidia and that the AI chip market is large enough to support multiple players.

“AMD is definitely making progress in the AI chip market,” said Stacy Rasgon, an analyst at Bernstein Research. “They have a good product and they are gaining traction with customers. But Nvidia is still the dominant player and they are not standing still.”

AMD’s MI300 Series: A Key Weapon in the AI Battle

The MI300 series of AI accelerators is central to AMD’s strategy for challenging Nvidia in the AI chip market. The MI300 series includes both the MI300A and the MI300X, each designed for different AI workloads.

The MI300A is an accelerated processing unit (APU) that combines CPU, GPU, and memory in a single package. This integrated design allows for high-bandwidth, low-latency communication between the CPU and GPU, making it ideal for workloads that require both general-purpose computing and AI acceleration.

The MI300X is a GPU-focused accelerator designed for training large AI models. It features a massive amount of high-bandwidth memory (HBM) and supports advanced interconnect technologies for scaling to large clusters. AMD claims that the MI300X offers comparable or even superior performance to Nvidia’s H100 GPU in certain AI workloads.

Building a Robust Software Ecosystem

Hardware alone will not close the gap with Nvidia, so AMD is also investing heavily in the software that developers need to build and run AI applications on its chips.

AMD’s ROCm (Radeon Open Compute platform) is a key component of its software ecosystem. ROCm is an open-source platform that provides developers with the tools and libraries they need to develop and deploy AI applications on AMD GPUs. AMD is actively working to expand the ROCm ecosystem and to make it easier for developers to use AMD hardware for AI.

Implications for the Broader Tech Industry

AMD’s aggressive push into the AI chip market has significant implications for the broader tech industry. First, it increases competition in the AI chip market, which could lead to lower prices and faster innovation. Second, it gives customers more choice in terms of AI hardware, allowing them to select the best solution for their specific needs. Third, it could accelerate the adoption of AI across various industries, as companies gain access to more affordable and powerful AI chips.

The increased competition could also benefit consumers through more advanced AI-powered products and services. From improved voice assistants to more personalized recommendations, the advancements in AI chips will likely translate to tangible benefits for everyday users.

Challenges and Risks

Despite AMD’s optimistic outlook and strategic initiatives, the company faces several challenges and risks in the AI chip market.

- Nvidia’s Dominance: Nvidia has a well-established market position, a strong brand, and a loyal customer base. It will be difficult for AMD to dislodge Nvidia from its leading position.

- Software Ecosystem: Nvidia has a more mature and comprehensive software ecosystem than AMD. AMD needs to continue investing in its ROCm platform to catch up with Nvidia’s CUDA ecosystem.

- Supply Chain Constraints: The global chip shortage has affected the entire semiconductor industry, and AMD is not immune to these constraints. Supply chain issues could limit AMD’s ability to meet demand for its AI chips.

- Market Volatility: The AI chip market is rapidly evolving, and new technologies and competitors could emerge at any time. AMD needs to be agile and adaptable to respond to changes in the market.

- Customer Adoption: While AMD has secured key partnerships, broad customer adoption of its MI300 series and related software will be crucial for sustained growth. Convincing large enterprises to switch from established Nvidia solutions requires demonstrating clear performance and cost advantages.

AMD’s Strategic Partnerships

To bolster its position in the AI market, AMD has forged strategic partnerships with key players across the industry. These collaborations aim to accelerate the development and deployment of AI solutions based on AMD’s hardware and software. Some notable partnerships include:

- Microsoft: AMD is working closely with Microsoft to optimize its AI chips for Microsoft’s Azure cloud platform. This collaboration includes optimizing AI frameworks and tools for AMD hardware, as well as developing new AI-powered services.

- Oracle: Oracle Cloud Infrastructure (OCI) offers instances powered by AMD’s EPYC processors and Instinct GPUs, providing customers with a range of options for AI workloads.

- Supermicro: Supermicro offers a range of servers optimized for AMD’s AI chips, enabling customers to build high-performance AI infrastructure.

- HPE: HPE is collaborating with AMD to deliver AI solutions for enterprise customers, leveraging AMD’s AI chips and HPE’s expertise in server design and system integration.

These partnerships are critical for expanding AMD’s reach in the AI market and for ensuring that its AI chips are well-supported by leading cloud providers and system integrators.

Financial Implications

AMD’s aggressive push into the AI chip market has significant financial implications for the company. The company is investing heavily in research and development, sales and marketing, and capital expenditures to support its AI initiatives.

AMD expects its AI business to contribute significantly to its revenue growth over the next few years. The company has set a target of achieving \$3.5 billion in AI revenue in 2024, and it expects this number to grow substantially in subsequent years.

The success of AMD’s AI strategy will depend on its ability to execute its product roadmap, gain market share, and manage its costs effectively. If AMD can achieve its goals, it could significantly increase its revenue and profitability.

AMD Stock Performance

Following Su’s optimistic forecast, AMD’s stock experienced a notable surge, reflecting investor confidence in the company’s AI prospects. The stock’s performance underscores the market’s belief in AMD’s potential to capitalize on the burgeoning AI chip market and challenge Nvidia’s dominance. However, analysts caution that the stock’s valuation is highly dependent on AMD’s ability to deliver on its promises and execute its AI strategy effectively.

The Road Ahead

The AI chip market is poised for significant growth in the coming years, and AMD is determined to be a major player in this market. The company’s strategic initiatives, including its focus on high-performance hardware, robust software ecosystem, and strategic partnerships, position it well to compete with Nvidia and other rivals.

However, AMD faces significant challenges, including Nvidia’s dominance, the complexity of building a comprehensive software ecosystem, and the need to manage supply chain constraints effectively. The company’s success will depend on its ability to execute its strategy flawlessly and to adapt to the ever-changing dynamics of the AI chip market.

As AI continues to transform industries and reshape the way we live and work, the competition between AMD and Nvidia will likely intensify, leading to further innovation and advancements in AI technology. The next few years will be critical in determining whether AMD can truly emerge as a dominant force in the AI chip market.

The intensified competition between AMD and Nvidia is expected to drive down prices, making AI technology more accessible to a wider range of businesses and organizations. This, in turn, could accelerate the adoption of AI across various industries, leading to further innovation and economic growth.

Conclusion

Lisa Su’s bold prediction of a \$400 billion AI chip market by 2027 has undeniably shaken up the industry. While Nvidia remains the current leader, AMD’s ambitious goals, coupled with its innovative products and strategic partnerships, position it as a formidable competitor. The coming years will be crucial in determining whether AMD can successfully navigate the challenges and capitalize on the immense opportunities presented by the burgeoning AI market. The outcome of this competition will not only shape the future of the AI chip industry but also have profound implications for the broader tech landscape and the global economy. Ultimately, the advancements in AI technology, fueled by this competition, will likely lead to transformative changes across various sectors, impacting everything from healthcare and transportation to manufacturing and entertainment.

Frequently Asked Questions (FAQ)

What is the main point of Lisa Su’s announcement regarding the AI chip market?

- Lisa Su, CEO of AMD, projected that the AI chip market will reach \$400 billion by 2027, a significant increase from previous estimates. This bold prediction signals AMD’s intent to challenge Nvidia’s dominance in the AI chip market and become a major player.

How does AMD plan to compete with Nvidia in the AI chip market?

- AMD is competing with Nvidia through a combination of strategies, including developing high-performance AI chips like the MI300 series, building a robust software ecosystem (ROCm) to support its hardware, forging strategic partnerships with key industry players, and offering competitive pricing. The MI300 series is designed to rival Nvidia’s H100 and H200 GPUs in performance.

What are the key factors driving AMD’s forecast of a \$400 billion AI chip market by 2027?

- The key factors driving AMD’s forecast include the increasing adoption of AI across diverse industries, the growing complexity of AI models requiring more processing power, the rise of edge computing creating new opportunities for AI chips, and advancements in AI hardware and software technologies.

What challenges does AMD face in achieving its goals in the AI chip market?

- AMD faces several challenges, including Nvidia’s established market position and comprehensive software ecosystem, potential supply chain constraints, market volatility, and the need to secure broad customer adoption of its products. Overcoming these challenges will be crucial for AMD to gain significant market share.

What are the financial implications of AMD’s push into the AI chip market?

- AMD is investing heavily in research and development, sales and marketing, and capital expenditures to support its AI initiatives. The company expects its AI business to contribute significantly to its revenue growth, targeting \$3.5 billion in AI revenue in 2024. The success of AMD’s AI strategy could significantly increase its revenue and profitability.

Expanded Analysis and Background Information

The semiconductor industry is currently undergoing a profound transformation, driven largely by the insatiable demand for processing power fueled by the rise of artificial intelligence. This has created a highly competitive landscape where companies like AMD and Nvidia are vying for dominance. Understanding the historical context and technological underpinnings of this competition is crucial to appreciating the significance of Lisa Su’s recent statements.

Historical Context: AMD vs. Nvidia

The rivalry between AMD and Nvidia is a long-standing one. Nvidia, founded in 1993, competed for years against ATI in the graphics card market, and AMD inherited that rivalry when it acquired ATI in 2006. Since then, each company has sought to offer superior graphics performance and features at competitive prices. However, with the advent of AI, the focus has shifted from traditional graphics processing to specialized AI accelerators.

Nvidia gained an early lead in the AI chip market by adapting its GPUs for use in training and deploying AI models. Its CUDA platform, a parallel computing platform and programming model, became the de facto standard for AI development, giving Nvidia a significant advantage. AMD, on the other hand, initially lagged behind in the AI space, but has since made significant strides in catching up.

Technological Underpinnings: GPUs and AI Acceleration

GPUs (Graphics Processing Units) are particularly well-suited for AI workloads because they can perform many calculations simultaneously, making them much faster than traditional CPUs (Central Processing Units) for certain tasks. This parallel processing capability is essential for training large AI models, which involve performing trillions of calculations.

AI accelerators are specialized chips designed specifically for AI workloads. These chips often incorporate advanced features such as Tensor Cores (in Nvidia GPUs) and Matrix Cores (in AMD GPUs), which are optimized for performing matrix multiplication, a fundamental operation in AI algorithms.
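To make the role of matrix multiplication concrete, here is a minimal sketch in plain Python of the operation that such cores accelerate in hardware. The function names and the sample values are illustrative assumptions, not taken from any AMD or Nvidia library.

```python
def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix using plain lists."""
    n, k, m = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    # Each of the n*m output cells is an independent dot product,
    # which is why this work spreads well across many parallel units.
    return [
        [sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
        for i in range(n)
    ]


def multiply_add_count(n, k, m):
    """Number of multiply-add steps in an (n x k) by (k x m) product."""
    return n * k * m


# Made-up example: 2 input samples with 2 features, mapped to 3 outputs.
inputs = [[1.0, 2.0], [3.0, 4.0]]
weights = [[0.5, -1.0, 0.0], [1.5, 2.0, 1.0]]

outputs = matmul(inputs, weights)
print(outputs)                      # [[3.5, 3.0, 2.0], [7.5, 5.0, 4.0]]
print(multiply_add_count(2, 2, 3))  # 12
```

The count grows with the product of all three dimensions, so layers with thousands of rows and columns quickly reach billions of multiply-adds per pass. That is the workload dedicated matrix hardware is built for.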

AMD’s MI300 Series: A Deep Dive

The MI300 series represents AMD’s most ambitious attempt to challenge Nvidia’s dominance in the AI chip market. Both the MI300A and the MI300X are designed to offer superior performance and efficiency for different AI workloads.

- MI300A: This APU (Accelerated Processing Unit) combines CPU, GPU, and memory in a single package, offering high-bandwidth, low-latency communication between the different components. This integrated design makes it ideal for workloads that require both general-purpose computing and AI acceleration. The MI300A is aimed primarily at data center and high-performance computing (HPC) systems, where simulation and AI workloads run side by side.

- MI300X: This GPU-focused accelerator is designed for training large AI models. It features a massive amount of high-bandwidth memory (HBM), which allows it to store and process large datasets more efficiently. The MI300X also supports advanced interconnect technologies, such as Infinity Fabric, which enables it to scale to large clusters for even more demanding AI workloads.

ROCm: AMD’s Software Ecosystem

ROCm (Radeon Open Compute platform) is AMD’s open-source software platform for AI development. It provides developers with the tools and libraries they need to develop and deploy AI applications on AMD GPUs. ROCm supports popular AI frameworks such as TensorFlow and PyTorch, and it includes a comprehensive set of debugging and profiling tools.

One of the key challenges for AMD is to make ROCm as easy to use and as feature-rich as Nvidia’s CUDA platform. AMD is actively working to expand the ROCm ecosystem and to attract more developers to its platform. The open-source nature of ROCm is a key advantage, as it allows developers to contribute to the platform and to customize it to their specific needs.
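As a rough illustration of what framework support means in practice, the sketch below checks which GPU backend a PyTorch installation targets. It assumes only that ROCm builds of PyTorch reuse the `torch.cuda` API and set `torch.version.hip`. The helper name `describe_accelerator` is hypothetical, and the code falls back gracefully when PyTorch or a GPU is absent.

```python
def describe_accelerator():
    """Return a short description of the GPU backend PyTorch can see."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    if not torch.cuda.is_available():
        return "no GPU visible to PyTorch"

    # ROCm builds of PyTorch expose AMD GPUs through the torch.cuda API;
    # torch.version.hip is set on those builds and is None on CUDA builds.
    backend = "ROCm/HIP" if getattr(torch.version, "hip", None) else "CUDA"
    return f"{backend}: {torch.cuda.get_device_name(0)}"


print(describe_accelerator())
```

Because the same `torch.cuda` calls work on both vendors' hardware, much existing PyTorch code can in principle run on AMD GPUs without source changes, although performance and operator coverage can still differ.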

The Impact of Geopolitical Factors

The AI chip market is also influenced by geopolitical factors, such as trade tensions between the United States and China. The US government has imposed restrictions on the export of certain advanced AI chips to China, which could impact the competitive landscape. Both AMD and Nvidia are adapting their strategies to navigate these geopolitical challenges.

The Future of AI Chips

The future of AI chips is likely to be characterized by further innovation and specialization. As AI models become more complex, there will be a growing need for specialized chips that are optimized for specific AI tasks. This could lead to the emergence of new types of AI accelerators, such as neuromorphic chips, which are inspired by the structure and function of the human brain.

Detailed Financial Analysis and Projections

To fully understand the significance of AMD’s \$400 billion market projection, it is necessary to delve into a more detailed financial analysis of the AI chip market and AMD’s potential revenue streams.

Current Market Size and Growth Rate:

Various market research reports provide different estimates for the current size of the AI chip market. However, most agree that the market is experiencing rapid growth, with annual growth rates exceeding 30%. This growth is driven by the increasing adoption of AI across various industries, including:

- Data Centers: AI is used in data centers for a wide range of applications, including image recognition, natural language processing, and fraud detection.

- Automotive: AI is used in autonomous vehicles for tasks such as object detection, lane keeping, and navigation.

- Healthcare: AI is used in healthcare for tasks such as medical image analysis, drug discovery, and personalized medicine.

- Consumer Electronics: AI is used in consumer electronics for tasks such as voice recognition, facial recognition, and personalized recommendations.

AMD’s Potential Revenue Streams:

AMD’s revenue from the AI chip market will come from several sources, including:

- Sales of MI300 series chips: AMD expects the MI300 series to be a major revenue driver in the AI chip market.

- Sales of other AI-related products: AMD also sells other products that are used in AI applications, such as CPUs, GPUs, and FPGAs.

- Software and services: AMD also generates revenue from software and services related to its AI chips, such as ROCm and AI training programs.

Factors Affecting AMD’s Market Share:

AMD’s ability to capture a significant share of the AI chip market will depend on several factors, including:

- Product performance: AMD’s AI chips need to offer competitive performance compared to Nvidia’s offerings.

- Software ecosystem: AMD needs to continue building a robust software ecosystem to support its AI chips.

- Customer relationships: AMD needs to build strong relationships with key customers in the AI market.

- Pricing: AMD needs to offer competitive pricing to attract customers.

Scenario Analysis:

To illustrate the potential financial impact of AMD’s AI strategy, we can consider a few different scenarios:

- Base Case: AMD achieves moderate success in the AI chip market, capturing a market share of around 15% by 2027. Applied to the projected \$400 billion market, that share would put AMD’s AI revenue at about \$60 billion in 2027.

- Optimistic Case: AMD achieves significant success in the AI chip market, capturing a market share of around 25% by 2027. That share would put AMD’s AI revenue at about \$100 billion in 2027.

- Pessimistic Case: AMD struggles to gain traction in the AI chip market, capturing a market share of around 5% by 2027. That share would put AMD’s AI revenue at about \$20 billion in 2027.

These scenarios highlight the potential upside for AMD if it can successfully execute its AI strategy. However, they also underscore the risks involved, as AMD’s success is not guaranteed.
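The scenario figures follow from multiplying the projected market size by each assumed share. A minimal sketch of that arithmetic, using only the numbers quoted in this article, is shown here. The variable and function names are illustrative, and the shares are assumptions rather than AMD guidance.

```python
# Projected 2027 AI chip market from the article, in billions of US dollars.
MARKET_SIZE_2027_BN = 400

# Assumed market shares for each scenario, in whole percent.
SCENARIO_SHARE_PCT = {
    "pessimistic": 5,
    "base": 15,
    "optimistic": 25,
}


def implied_revenue_bn(market_bn, share_pct):
    """Revenue implied by holding share_pct percent of a market_bn market."""
    return market_bn * share_pct / 100


for name, share_pct in SCENARIO_SHARE_PCT.items():
    revenue = implied_revenue_bn(MARKET_SIZE_2027_BN, share_pct)
    print(f"{name}: {share_pct}% share -> ${revenue:.0f}B")
# pessimistic: 5% share -> $20B
# base: 15% share -> $60B
# optimistic: 25% share -> $100B
```

Each percentage point of share is worth \$4 billion under the \$400 billion projection, so the results are equally sensitive to the market forecast itself: if the market turned out half as large, every scenario’s revenue would halve as well.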

Broader Economic Implications

The growth of the AI chip market has significant implications for the broader economy. AI is transforming industries and creating new opportunities for innovation and economic growth. The availability of powerful and affordable AI chips is essential for enabling this transformation.

Job Creation: The AI industry is creating new jobs in areas such as AI research, software development, and data science. As the AI chip market grows, it will likely lead to further job creation.

Increased Productivity: AI can help businesses automate tasks, improve decision-making, and create new products and services. This can lead to increased productivity and economic growth.

Innovation: AI is driving innovation in various fields, such as healthcare, transportation, and manufacturing. The availability of powerful AI chips is accelerating this innovation.

Conclusion: A Transformative Era

The AI chip market is at the forefront of a transformative era in technology and economics. AMD’s aggressive push into this market is a testament to the immense potential of AI. While challenges remain, the company’s strategic initiatives and ambitious goals position it as a key player in shaping the future of AI. The intensified competition between AMD and Nvidia will likely lead to further innovation and advancements, ultimately benefiting consumers, businesses, and the global economy. The next few years will be crucial in determining the long-term impact of this technological revolution and AMD’s role in it.
